This is the first part of a planned three-part series on using Terraform to deploy Google Cloud Functions and invoke them on a schedule with Cloud Scheduler.

This first article covers a standard, simple setup that uses Pub/Sub for the scheduling. The second part of the series is now available here.

This is actually already quite well documented in the official documentation, but it is the foundation for the following articles, so let’s start simple, shall we?

I will try to keep all examples as short and to the point as possible. That means I will skip configuring some things, such as regions and versions, where possible, leaving it up to Terraform and Google Cloud to pick the defaults for me. This keeps the examples focused on the subject at hand, but it also means that they are not production-ready in any way.

All source code for this project is available at github.com/hedlund/scheduled-cloud-functions.

Prerequisites

In order to follow along with the examples, you’ll need a working Terraform installation. I’m using Terraform 0.14.4 while writing this, but newer versions should work as well.

You also need to authenticate the Google Cloud provider somehow. One way of accomplishing this is to install the gcloud command-line tool and then authenticate it by running:

```shell
gcloud auth application-default login
```

Creating a project

A lot of tutorials expect you to create a project first, using the Google Cloud Platform console, and then connect your Terraform scripts to that project. I’m going to create the project as part of the script itself, because later in the series we are going to address some peculiarities of cross-project configuration, so I want to have everything in my Terraform code.

In order to work with Google Cloud, we need to add the Google provider to our code, and then we can create a brand new project:

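```hcl
# No project or region is set on the provider; credentials are picked up
# from Application Default Credentials (see the gcloud command above).
provider "google" {
}

resource "google_project" "project" {
  name       = "Scheduled Cloud Functions"
  project_id = "hedlund-scheduled-functions"
}
```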

There are a number of things to note here…

First, as I mentioned in the introduction, I’ll be leaving out a lot of specifics in the examples, such as defining a default region. In your production code, you probably want to be more in control, so study the Google provider documentation thoroughly.

Second, for the same reason I am not specifying any kind of remote state backend (such as a Storage bucket), which I really recommend that you use in your projects.

Third, project IDs are globally unique, so if you’re following along, you’ll probably need to change this to your own ID.

Creating a Pub/Sub topic

When scheduling a Cloud Function, we basically have two options:

- Using a Pub/Sub topic

- Using an HTTP trigger

You can connect your functions to other types of events as well, but when it comes to scheduling, these are the two main alternatives. In this first part of the series, we’re going to focus on the Pub/Sub trigger, as that is the easiest way to configure scheduling.

In order to trigger the function, we need to enable the Pub/Sub service and create a topic that our function can listen to:

```hcl
resource "google_project_service" "pubsub" {
  project = google_project.project.project_id
  service = "pubsub.googleapis.com"
}

resource "google_pubsub_topic" "hello" {
  project = google_project.project.project_id
  name    = "hello-topic"

  depends_on = [
    google_project_service.pubsub,
  ]
}
```

We add the `depends_on` meta-argument so that Terraform knows the Pub/Sub service must be enabled before it creates the topic.

Once the topic is created, we can deploy the function.

Creating a Cloud Function

As the function itself is not the focus of this article, I’m just going to deploy a simple Hello World. I’m going to use Go, but of course you can write your function in any of the supported languages.

The type of trigger defines the signature of your cloud function. In the case of a Pub/Sub trigger, it should look something along the lines of:

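```go
package hello

import (
	"context"
	"log"
)

// PubSubMessage is the payload of a Pub/Sub event.
type PubSubMessage struct {
	Data []byte `json:"data"`
}

// HelloPubSub consumes a Pub/Sub message and logs a greeting.
func HelloPubSub(ctx context.Context, m PubSubMessage) error {
	name := string(m.Data)
	log.Printf("Hello, %s!", name)
	return nil
}
```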

I’m not going to cover much of what we’re doing here as it’s basically straight out of the reference documentation.
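One detail is worth spelling out, though: the Pub/Sub event delivered to the function carries its `data` field as a base64-encoded string, and Go's `encoding/json` package decodes a base64 string into a `[]byte` field automatically. That is why `HelloPubSub` can simply call `string(m.Data)`. The sketch below imitates that decoding step locally, assuming an event body shaped like `{"data": "..."}`; it is not the Cloud Functions runtime itself, and `decodeEvent` is a hypothetical helper.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// PubSubMessage mirrors the struct used by the HelloPubSub function.
type PubSubMessage struct {
	Data []byte `json:"data"`
}

// decodeEvent is a hypothetical helper that unmarshals a JSON event body.
// encoding/json base64-decodes the "data" string into the []byte field.
func decodeEvent(body []byte) (PubSubMessage, error) {
	var m PubSubMessage
	err := json.Unmarshal(body, &m)
	return m, err
}

func main() {
	// "UHViL1N1Yg==" is the base64 encoding of "Pub/Sub".
	m, err := decodeEvent([]byte(`{"data": "UHViL1N1Yg=="}`))
	if err != nil {
		panic(err)
	}
	fmt.Printf("Hello, %s!\n", string(m.Data))
}
```

Running it prints `Hello, Pub/Sub!`, the same greeting the deployed function logs.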

I’m going to use Terraform to deploy the function as well, but you could deploy the function separately (using some kind of CI/CD pipeline perhaps), and then configure only the scheduling parts using Terraform.

Deploying Cloud Functions using Terraform actually requires quite a bit of boilerplate infrastructure. Luckily, it is quite easy to set up. We start by saving the file above as hello_pubsub.go. Then, we create a Storage bucket:

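```hcl
resource "google_storage_bucket" "functions" {
  project = google_project.project.project_id
  name    = "${google_project.project.project_id}-functions"

  # Newer versions of the google provider require an explicit location;
  # "US" matches the default that older versions picked.
  location = "US"
}
```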

Storage bucket names are, like project IDs, globally unique, so a little trick I’m using is to always use the project ID as a prefix to the bucket name. That way it’s easy to find the project where the bucket actually exists.
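The prefix trick is also safe length-wise: project IDs are at most 30 characters, while Cloud Storage accepts bucket names of up to 63, so the `-functions` suffix always fits. A small Go sketch of the convention with a simplified validity check might look like this; `functionsBucket` and the regular expression are illustrative assumptions, not an official validator (Cloud Storage also allows dots, with extra rules this sketch does not model).

```go
package main

import (
	"fmt"
	"regexp"
)

// bucketNamePattern is a simplified form of the Cloud Storage naming rules:
// 3 to 63 characters of lowercase letters, digits, dashes and underscores,
// starting and ending with a letter or digit. Dotted names are rejected here.
var bucketNamePattern = regexp.MustCompile(`^[a-z0-9][a-z0-9_-]{1,61}[a-z0-9]$`)

// functionsBucket is a hypothetical helper mirroring the Terraform expression
// "${google_project.project.project_id}-functions".
func functionsBucket(projectID string) (string, error) {
	name := projectID + "-functions"
	if !bucketNamePattern.MatchString(name) {
		return "", fmt.Errorf("invalid bucket name %q", name)
	}
	return name, nil
}

func main() {
	name, err := functionsBucket("hedlund-scheduled-functions")
	if err != nil {
		panic(err)
	}
	fmt.Println(name)
}
```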

At this point, you may need to associate a billing account with your project in order to continue. After you’ve done so, you also need to add `billing_account = "<BILLING ACCOUNT ID>"` to your `google_project` configuration; otherwise Terraform will disassociate the billing account from your project the next time you apply the configuration.

We create the deployable function by first zipping it locally on your machine and then having Terraform upload it to the Storage bucket:

```hcl
data "archive_file" "pubsub_trigger" {
  type        = "zip"
  source_file = "${path.module}/hello_pubsub.go"
  output_path = "${path.module}/pubsub_trigger.zip"
}

resource "google_storage_bucket_object" "pubsub_trigger" {
  bucket = google_storage_bucket.functions.name
  name   = "pubsub_trigger-${data.archive_file.pubsub_trigger.output_md5}.zip"
  source = data.archive_file.pubsub_trigger.output_path
}
```

You will probably need to run `terraform init` again, as we are introducing a new provider (hashicorp/archive) to create the ZIP file.

The archive containing the function source code is now available in the Storage bucket, and we can finally deploy the function itself:

```hcl
resource "google_project_service" "cloudbuild" {
  project = google_project.project.project_id
  service = "cloudbuild.googleapis.com"
}

resource "google_project_service" "cloudfunctions" {
  project = google_project.project.project_id
  service = "cloudfunctions.googleapis.com"
}

resource "google_cloudfunctions_function" "pubsub_trigger" {
  project = google_project.project.project_id
  name    = "hello-pubsub"
  region  = "us-central1"

  entry_point = "HelloPubSub"
  runtime     = "go113"

  source_archive_bucket = google_storage_bucket.functions.name
  source_archive_object = google_storage_bucket_object.pubsub_trigger.name

  event_trigger {
    event_type = "google.pubsub.topic.publish"
    resource   = google_pubsub_topic.hello.name
  }

  depends_on = [
    google_project_service.cloudbuild,
    google_project_service.cloudfunctions,
  ]
}
```

Again, we need to enable a couple of services in order to deploy the function: not only the obvious Cloud Functions, but also Cloud Build, which builds the function code. As for the function itself, there are a number of things we need to define, and I’ve tried to group them into logical blocks.

First, we define which project the function belongs to, its name, and, as Cloud Functions are regional resources, the region where it will run. If you had defined a default region in the provider configuration, the function could have inferred it from that, but I prefer to be explicit about it (as you may need to deploy your functions in multiple regions).

We also need to specify the runtime (Go 1.13) and the entry point, i.e. which Go function to invoke. The entry point can actually be inferred as long as you follow some naming rules, but again, I prefer to be explicit about it.

The source archive properties tell Terraform to use the ZIP file that we uploaded to the Storage bucket earlier. This also means that if we change the source code of the function, re-applying the Terraform configuration will automatically re-deploy the function as well.
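The re-deploy works because of how the bucket object is named: `pubsub_trigger-${data.archive_file.pubsub_trigger.output_md5}.zip` embeds the MD5 hex digest of the archive, so any change to the ZIP yields a new object name, and therefore a changed `source_archive_object` on the function. A minimal Go sketch of that content-addressed naming, where `objectName` is a hypothetical helper, looks like this:

```go
package main

import (
	"crypto/md5"
	"encoding/hex"
	"fmt"
)

// objectName mimics the Terraform expression
// "pubsub_trigger-${data.archive_file.pubsub_trigger.output_md5}.zip".
func objectName(archive []byte) string {
	sum := md5.Sum(archive)
	return "pubsub_trigger-" + hex.EncodeToString(sum[:]) + ".zip"
}

func main() {
	v1 := objectName([]byte("archive contents, version 1"))
	v2 := objectName([]byte("archive contents, version 2"))
	// Different contents produce different names; identical contents
	// produce the same name, so an unchanged ZIP causes no re-deploy.
	fmt.Println(v1 != v2)
}
```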

The `event_trigger` block is where we configure the function to be triggered by Pub/Sub, linking it to the topic we created earlier. Changing the type of trigger here changes the whole behavior of the Cloud Function, and the expected structure of the code, so this is a very important part of the configuration.

Finally, we help Terraform again by informing it that it needs to enable the services before it tries to deploy the Cloud Function.

At this point, we have a working Cloud Function deployed, but the only way of triggering it is to publish something to the Pub/Sub topic, which is what we’ll use Cloud Scheduler for.

Creating a scheduler job

There’s a curious prerequisite before we can use Cloud Scheduler, as we actually have to create a Google App Engine application in our project:

```hcl
resource "google_app_engine_application" "app" {
  project     = google_project.project.project_id
  location_id = "us-central"
}
```

Then, in a similar fashion as before, we can enable the scheduler service and create the scheduler job itself:

```hcl
resource "google_project_service" "cloudscheduler" {
  project = google_project.project.project_id
  service = "cloudscheduler.googleapis.com"
}

resource "google_cloud_scheduler_job" "hello_pubsub_job" {
  project = google_project.project.project_id
  region  = google_cloudfunctions_function.pubsub_trigger.region
  name    = "hello-pubsub-job"

  schedule = "every 10 minutes"

  pubsub_target {
    topic_name = google_pubsub_topic.hello.id
    data       = base64encode("Pub/Sub")
  }

  depends_on = [
    google_app_engine_application.app,
    google_project_service.cloudscheduler,
  ]
}
```

We define the scheduler job in the same region as the Cloud Function, meaning we can easily change the region in one place and simply re-apply the rules without having to search and replace.

We also configure it to run every 10 minutes. For the schedule, you can use either traditional crontab syntax (for example `*/10 * * * *`) or the simpler App Engine cron syntax, which is what our example uses.

The `pubsub_target` block tells Cloud Scheduler that our job should publish to the topic we created, and that the data payload should be the string `Pub/Sub`. You can send pretty much anything you want here, as long as you base64 encode it and your function knows how to parse it.
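As an illustration of the "knows how to parse it" part, suppose the job sent a JSON document instead of a plain string, for example by setting `data = base64encode(jsonencode({ name = "Pub/Sub" }))` in the `pubsub_target` block (an assumption, not the configuration used in this article). The function would then unmarshal `m.Data` a second time, roughly as sketched below; `helloPayload`, its `name` field, and `greeting` are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// PubSubMessage mirrors the struct used by the HelloPubSub function.
type PubSubMessage struct {
	Data []byte `json:"data"`
}

// helloPayload is a hypothetical structured payload sent by the scheduler job.
type helloPayload struct {
	Name string `json:"name"`
}

// greeting parses the message data as JSON and builds the log line.
func greeting(m PubSubMessage) (string, error) {
	var p helloPayload
	if err := json.Unmarshal(m.Data, &p); err != nil {
		return "", fmt.Errorf("parsing payload: %w", err)
	}
	return fmt.Sprintf("Hello, %s!", p.Name), nil
}

func main() {
	// By the time the function runs, Data already holds the decoded bytes.
	msg := PubSubMessage{Data: []byte(`{"name":"Pub/Sub"}`)}
	s, err := greeting(msg)
	if err != nil {
		panic(err)
	}
	fmt.Println(s)
}
```

Returning an error on malformed input keeps bad payloads visible in the function's logs instead of silently logging an empty name.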

Making sure it works

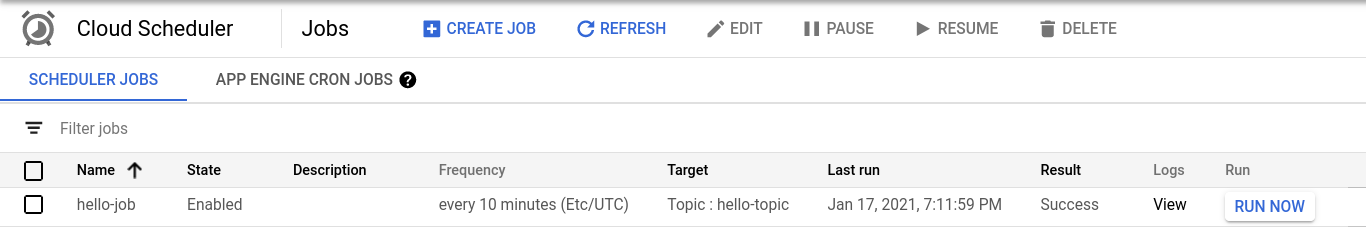

By opening the Cloud Scheduler in the GCP console, we can check that the job has been created, and we can even trigger it manually if we like:

Switching to Cloud Functions instead, we can check the function’s logs and see that it performs the action we told it to: printing `Hello, Pub/Sub!`.

Wrapping up

If you’ve followed along with the guide, and especially if you’ve connected a billing account, remember to tear down and delete all resources once you are finished with the project (for example with `terraform destroy`). Otherwise you risk leaving resources lying around that may end up costing you money.

The next article in the series will cover scheduling the Cloud Function using HTTP triggers instead.