This is the second part of a three-part series about scheduling Google Cloud Functions, using technologies such as Terraform and Cloud Scheduler. If you haven’t read the first part yet, I suggest you have a glance at it, because it is the foundation for the things I’m setting out to do here.

In the last article we deployed, and scheduled, a Cloud Function using Pub/Sub, which is the quickest, easiest and recommended way of getting it done. In this article, we’re going to remove the Pub/Sub topic and simply have Cloud Scheduler invoke the Cloud Function directly over HTTP.

As I mentioned above, I would argue that using Pub/Sub for scheduling your Cloud Functions is the preferred way. You get security by default (as nothing is exposed directly to the internet), and the general infrastructure and configuration are much simpler (as you will see).

So why would you bother using HTTP triggers? I actually don’t have a good answer for that. Perhaps you need to be able to invoke the same function both over HTTP and on a schedule? The reason I’m covering it here is that it is a necessary ingredient in what I’m setting out to do in the next part of the series, which is triggering Cloud Functions using Pub/Sub across project borders. By breaking it up into three distinct steps, my goal is to make all the information a bit more digestible.

As usual, all source code for this project is available at github.com/hedlund/scheduled-cloud-functions.

HTTP triggered function

If you look at the code in the GitHub repository, you’ll see that I’ve left the old resources as-is and created the new infrastructure alongside them. I’m therefore reusing the definitions and resources we created in the last article, such as the project, services, storage bucket, etc.

As I mentioned in part 1, the type of trigger that we choose to use defines what the signature of our (Go) function will look like. As we are going to invoke this function using HTTP, we need to write a new function with an HTTP signature:

package hello

import (
	"encoding/json"
	"log"
	"net/http"
)

type HTTPMessage struct {
	Name string `json:"name"`
}

func HelloHTTP(w http.ResponseWriter, r *http.Request) {
	if r.Method != "POST" {
		http.Error(w, "Not allowed", http.StatusMethodNotAllowed)
		return
	}
	var m HTTPMessage
	if err := json.NewDecoder(r.Body).Decode(&m); err != nil {
		http.Error(w, "Bad request", http.StatusBadRequest)
		return
	}
	log.Printf("Hello, %s!", m.Name)
	w.WriteHeader(http.StatusNoContent)
}

Again, the purpose of this article is not to cover the Go specifics (use whatever language you are comfortable with), but basically what we do is check that we get a POST request, parse the request body as JSON, and then log the name within it. Save the file as hello_http.go.
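If you want to sanity-check the handler before deploying it, one option is to exercise it in memory with Go’s net/http/httptest package. The following is only a rough sketch: the handler is copied into a main package to keep the example self-contained, and statusFor is a hypothetical helper of my own, not something from the repository.

```go
package main

import (
	"encoding/json"
	"fmt"
	"log"
	"net/http"
	"net/http/httptest"
	"strings"
)

// HTTPMessage and HelloHTTP are copied from hello_http.go so that
// this sketch can be run on its own.
type HTTPMessage struct {
	Name string `json:"name"`
}

func HelloHTTP(w http.ResponseWriter, r *http.Request) {
	if r.Method != http.MethodPost {
		http.Error(w, "Not allowed", http.StatusMethodNotAllowed)
		return
	}
	var m HTTPMessage
	if err := json.NewDecoder(r.Body).Decode(&m); err != nil {
		http.Error(w, "Bad request", http.StatusBadRequest)
		return
	}
	log.Printf("Hello, %s!", m.Name)
	w.WriteHeader(http.StatusNoContent)
}

// statusFor is a hypothetical helper: it sends a single in-memory
// request to the handler and returns the recorded status code.
func statusFor(method, body string) int {
	req := httptest.NewRequest(method, "/", strings.NewReader(body))
	rec := httptest.NewRecorder()
	HelloHTTP(rec, req)
	return rec.Code
}

func main() {
	fmt.Println(statusFor(http.MethodPost, `{"name":"HTTP"}`)) // well-formed request
	fmt.Println(statusFor(http.MethodGet, ""))                 // wrong method
	fmt.Println(statusFor(http.MethodPost, "not json"))        // malformed body
}
```

The three calls cover the three branches of the function: a valid POST, a request with the wrong method, and a body that is not JSON.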

We’re going to deploy this function alongside the Pub/Sub one, so we need to create a new ZIP archive, and upload it to the function bucket:

data "archive_file" "http_trigger" {
  type = "zip"
  source_file = "${path.module}/hello_http.go"
  output_path = "${path.module}/http_trigger.zip"
}

resource "google_storage_bucket_object" "http_trigger" {
  bucket = google_storage_bucket.functions.name
  name = "http_trigger-${data.archive_file.http_trigger.output_md5}.zip"
  source = data.archive_file.http_trigger.output_path
}

Deploying the function is equally straightforward:

resource "google_cloudfunctions_function" "http_trigger" {
  project = google_project.project.project_id
  name = "hello-http"
  region = "us-central1"
  entry_point = "HelloHTTP"
  runtime = "go113"
  trigger_http = true
  source_archive_bucket = google_storage_bucket.functions.name
  source_archive_object = google_storage_bucket_object.http_trigger.name
  depends_on = [
    google_project_service.cloudbuild,
    google_project_service.cloudfunctions,
  ]
}

Compared to the Pub/Sub function we deployed earlier, there is basically only one change (besides referencing a completely different function, that is). The event_trigger block has been replaced with trigger_http = true, and that is all that’s needed to make the function an HTTP triggered one.

A few words about access

At this point you may have an HTTP triggered Cloud Function, but you’re not allowed to actually trigger it, as we haven’t configured access yet. This is where it gets a bit complicated (not much though), but we’ll manage.

By far the easiest way of “solving” access to the function is to make it publicly accessible. That means that anyone with the URL to the function can invoke it. If you were writing part of an API for a web service, this may be exactly what you want, but as this is a scheduled function, my bet is that you’d want to limit access to it.

In case you want to make the function publicly accessible, the following code will do the trick:

resource "google_cloudfunctions_function_iam_member" "public" {
  project = google_cloudfunctions_function.http_trigger.project
  region = google_cloudfunctions_function.http_trigger.region
  cloud_function = google_cloudfunctions_function.http_trigger.name
  role = "roles/cloudfunctions.invoker"
  member = "allUsers"
}

Unless you are absolutely certain that this is what you want to do, I really recommend against making the function public.

I’ll assume that we’re not taking the easy (i.e. public) route here, which means there are a few things we need to cover. First, whenever you deploy a Cloud Function with the default settings (like we have), your function will actually run as the Google App Engine default service account. You can see this by inspecting the details of your function in the console: the account will have an email address looking like YOUR_PROJECT_ID@appspot.gserviceaccount.com.

While it is perfectly possible to configure access using the default service account, I would argue that it is both cleaner and better practice to use a separate service account instead (separation of concerns, and the principle of least privilege). So let’s create a new service account:

resource "google_service_account" "user" {
  project = google_project.project.project_id
  account_id = "invocation-user"
  display_name = "Invocation Service Account"
}

Then we’re going to configure the Cloud Function to run using the new service account. All you need to do is add a service_account_email parameter, turning the whole block into:

resource "google_cloudfunctions_function" "http_trigger" {
  project = google_project.project.project_id
  name = "hello-http"
  region = "us-central1"
  service_account_email = google_service_account.user.email
  entry_point = "HelloHTTP"
  runtime = "go113"
  trigger_http = true
  source_archive_bucket = google_storage_bucket.functions.name
  source_archive_object = google_storage_bucket_object.http_trigger.name
  depends_on = [
    google_project_service.cloudbuild,
    google_project_service.cloudfunctions,
  ]
}

Creating, and running with, an explicit service account like this is much better from a security standpoint, as the GAE service account has a scary amount of permissions by default.

If you’re following along and re-apply the Terraform configuration, you can see that the function must be completely replaced.

Creating the schedule

Now that we have the new function, we can create a scheduler job to invoke it. In order to do so, we are going to use the same service account that we created above. But even though the function itself is running as that service account, we still need to grant the account invoker permissions on the function before we can use it with the scheduler:

resource "google_cloudfunctions_function_iam_member" "invoker" {
  project = google_cloudfunctions_function.http_trigger.project
  region = google_cloudfunctions_function.http_trigger.region
  cloud_function = google_cloudfunctions_function.http_trigger.name
  role = "roles/cloudfunctions.invoker"
  member = "serviceAccount:${google_service_account.user.email}"
}

As you can see, it is almost identical to the public access block earlier, but instead of granting access to everyone we target a specific service account.

Creating the scheduler job is then just a matter of:

resource "google_cloud_scheduler_job" "hello_http_job" {
  project = google_project.project.project_id
  region = google_cloudfunctions_function.http_trigger.region
  name = "hello-http-job"
  schedule = "every 10 minutes"
  http_target {
    uri = google_cloudfunctions_function.http_trigger.https_trigger_url
    http_method = "POST"
    body = base64encode("{\"name\":\"HTTP\"}")
    oidc_token {
      service_account_email = google_service_account.user.email
    }
  }
}

Again, this is very similar to the scheduler job we created with Pub/Sub, but the target declaration is different. We tell it to use the HTTPS trigger URL of our function, use a POST request, and encode a small JSON object matching the format our function expects. Finally, we tell it to make authenticated calls using the OIDC token of our service account.
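To make the http_target block a bit more concrete, here is a rough Go sketch of the kind of request I would expect the scheduler to send, assuming it decodes the base64 body and passes the OIDC token as a bearer token in the Authorization header. The buildSchedulerRequest helper, the example.invalid URL and the placeholder token are all made up for illustration; a real token is minted by Google on behalf of the service account.

```go
package main

import (
	"bytes"
	"encoding/base64"
	"fmt"
	"log"
	"net/http"
)

// buildSchedulerRequest is a hypothetical helper that approximates the
// request described by the http_target block: a POST whose body is the
// base64-decoded payload, authenticated with a bearer token.
func buildSchedulerRequest(url, encodedBody, idToken string) (*http.Request, error) {
	// Terraform's base64encode produced encodedBody; the function
	// itself receives the decoded bytes.
	body, err := base64.StdEncoding.DecodeString(encodedBody)
	if err != nil {
		return nil, fmt.Errorf("decoding body: %w", err)
	}
	req, err := http.NewRequest(http.MethodPost, url, bytes.NewReader(body))
	if err != nil {
		return nil, err
	}
	// Assumption: the OIDC token travels as a standard bearer token.
	req.Header.Set("Authorization", "Bearer "+idToken)
	return req, nil
}

func main() {
	// Same payload as in the Terraform configuration.
	encoded := base64.StdEncoding.EncodeToString([]byte(`{"name":"HTTP"}`))

	// The URL and token are placeholders, so the request is only built
	// and inspected here, never sent.
	req, err := buildSchedulerRequest("https://example.invalid/hello-http", encoded, "PLACEHOLDER_TOKEN")
	if err != nil {
		log.Fatal(err)
	}
	fmt.Println(req.Method, req.URL)
	fmt.Println(req.Header.Get("Authorization"))
	fmt.Println(req.ContentLength, "bytes of JSON in the body")
}
```

The point is simply that the function never sees the base64 string: by the time HelloHTTP runs, the body is plain JSON again, which is why the handler can decode it directly.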

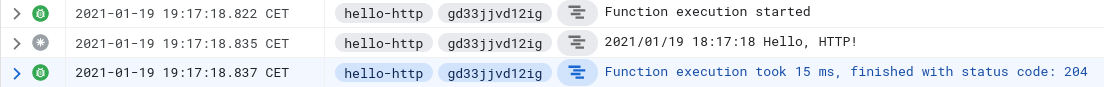

If we open the Logs Explorer and wait until the scheduler kicks in, we an see that our function is invoked as expected:

Wrapping up

As usual, if you’ve followed along with the guide, remember to destroy any resources you have created, so you don’t waste any money unnecessarily.

The next, and final, article in the series will cover invoking Cloud Functions using Pub/Sub across project boundaries.